Michael Winner may be a good food critic, but if you were looking for someone to cook you the finest meal for your budget, I doubt he would be your first choice.Same with film critics, they may be able to write an insightful and critical review, but would you want them directing a film for your budget? Would you want Jakob Neilsen, who is essentially a usability critic, to design your website? I mean, take a look at his site!

When you are building a product, you get a usability company in because you know that usability is a good thing that you want to have. If usability companies are the critics, what are you expecting?

The first usability test I ran was in 1991. I’ve set up usability labs, I’ve observed hundreds of people interacting with technology and products. My passion has always to do things at speed, turn around results ASAP and engage all stakeholders in the process. But I’ll talk about that in a later post. For now I’ll draw on experience of working with organisations that have commissioned usability companies to review their products. I’ll breakdown the process I have often observed from usability testing vendors, considering both the elapsed time and the actual ‘value added time’ taken.

Day one

The client (usually the business) engage the usability company to audit the usability of the product that is being developed. The consultants will come in and understand the user tasks, roles and goals; the target audience will be identified for recruitment. ‘Value added time’ = 1 hour.

Day two

The team go away and produce a test plan and a recruitment brief for a research agency to find participants. They promise to get it back to the client in a couple of days. They contact their preferred agency who set about recruiting people (let’s assume this is a simple brief for a retail website targeted at young mothers). Produce test plan (value added time = 3 hours). Send to client for review.

Day three

Client return test plan with a few comments. Update test plan. Value added time = 30 minutes.

Days six-ten

Twelve usability sessions, each an hour long, they do three a day, that is four days of testing. Value added time = 12 hours

Days eleven – thirteen

The team spend three days analysising and synthesising the results, pull supporting video clips and produce a detailed report. Value added time – 15 hours

Day fourteen

The client sees the report for the first time. (Value added time = 2 hours). Interesting results. (IT representation were not invited, they did not commission the report, the product owner wants to see the output first before sharing it with IT).

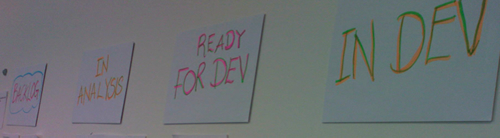

Day sixteen

The product owner informs the dev team of the changes that need to be made in the light of the usability report. Project manager sucks air through his teeth and says “you’ll need to raise a change request for those items… ha! quick wins they say? hardly… Hmmm, OK, change the labels in the field, we should be able to do that…”

Value added Vs. Elapsed time

The usability company has delivered and their engagement is complete. From the start of the process to the recommendations hitting the developers who must ultimately action these, for this not-too-fictitious scenario sixteen days have passed, of which only four were spent on value-added tasks, actually doing stuff.

Day n

The product goes live. The usability company are aghast that so many of the changes they reccomended have not been implemented. They place the blame fairly and sqaurely at the door of the developers and reinforce their belief that IT just doesn’t listen, or worse, care about usability. The critics have critcised from their armchair, like the pigs and chickens they are the chickens, participated not committed.

Usability rant part 2>